Exp-lookit-images-audio Class

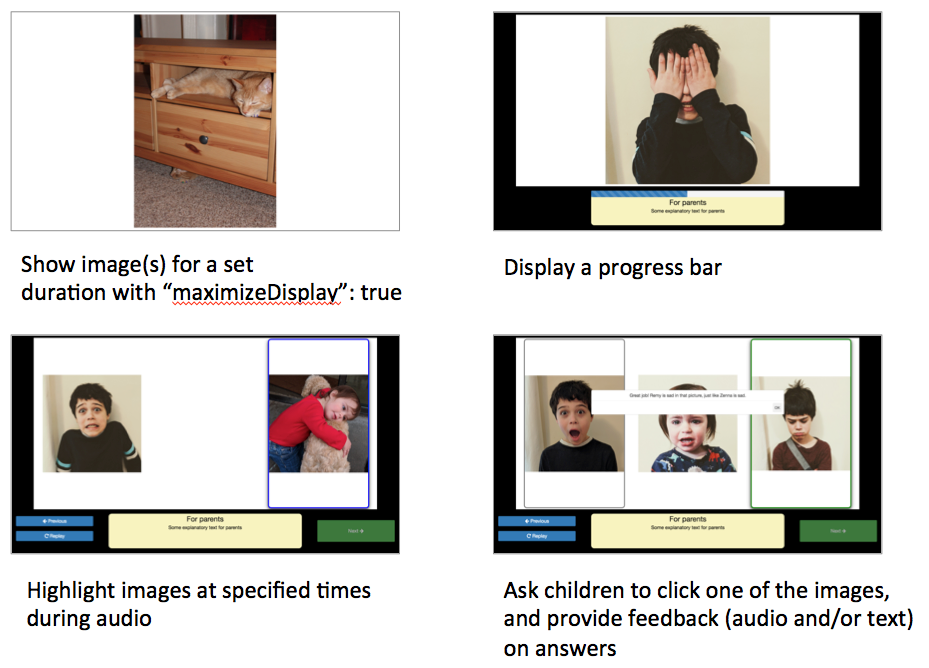

These docs have moved here.

Frame to display image(s) and play audio, with optional video recording. Options allow customization for looking time, storybook, forced choice, and reaction time type trials, including training versions where children (or parents) get feedback about their responses.

This can be used in a variety of ways, for example:

- Display an image for a set amount of time and measure looking time.
- Display two images for a set amount of time and play audio for a looking-while-listening paradigm.
- Show a "storybook page" where you show images and play audio, having the parent/child press 'Next' to proceed. If desired, images can appear and be highlighted at specific times relative to audio. E.g., the audio might say "This [image of Remy appears] is a boy named Remy. Remy has a little sister [image of Zenna appears] named Zenna. [Remy highlighted] Remy's favorite food is Brussels sprouts, but [Zenna highlighted] Zenna's favorite food is ice cream. [Remy and Zenna both highlighted] Remy and Zenna both love tacos!"
- Play audio asking the child to choose between two images by pointing or answering verbally. Show text for the parent about how to help and when to press Next.
- Play audio asking the child to choose between two images, and require one of those images to be clicked to proceed (see "choiceRequired" option).
- Measure reaction time as the child is asked to choose a particular option on each trial (e.g., a central cue image is shown first, then two options at a short delay; the child clicks on the one that matches the cue in some way).
- Provide audio and/or text feedback on the child's (or parent's) choice before proceeding, either just to make the study a bit more interactive ("Great job, you chose the color BLUE!") or for initial training/familiarization to make sure they understand the task. Some images can be marked as the "correct" answer and a correct answer required to proceed. If you'd like to include some initial training questions before your test questions, this is a great way to do it.

In general, the images are displayed in a designated region of the screen with aspect ratio 7:4 (1.75 times as wide as it is tall) to standardize display as much as possible across different monitors. If you want to display things truly fullscreen, you can use autoProceed and not provide parentTextBlock so there's nothing at the bottom, and then set maximizeDisplay to true.
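To get a feel for how much of a given monitor a 7:4 region can cover, here is a rough sketch. It assumes the region is simply the largest 7:4 rectangle that fits in the available area; the frame's actual sizing logic is not described here and may differ. The function name is made up for this example.

```javascript
// Sketch only: assumes the display region is the largest 7:4 rectangle
// that fits inside the available area. The frame's real sizing may differ.
const ASPECT_RATIO = 7 / 4; // width / height = 1.75

function fitDisplayRegion(availableWidth, availableHeight) {
  // If the available area is wider than 7:4, height is the limiting dimension.
  if (availableWidth / availableHeight > ASPECT_RATIO) {
    return { width: availableHeight * ASPECT_RATIO, height: availableHeight };
  }
  // Otherwise width is the limiting dimension.
  return { width: availableWidth, height: availableWidth / ASPECT_RATIO };
}

console.log(fitDisplayRegion(1920, 1080)); // { width: 1890, height: 1080 }
console.log(fitDisplayRegion(1400, 1000)); // { width: 1400, height: 800 }
```

On a 16:9 monitor the region is nearly the full screen; on squarer displays there will be bands above and below it.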

Webcam recording may be turned on or off; if on, stimuli are not displayed and audio is not started until recording begins. (Using the frame-specific doRecording property is good if you have a smallish number of test trials and prefer to have separate video clips for each. For reaction time trials or many short trials, you will likely want to use session recording instead, i.e. start the session recording before the first trial and end on the last trial, to avoid the short delays related to starting/stopping the video.)
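As a sketch of the session-recording approach, the snippet below builds a short run of trials with per-frame recording turned off, session recording started on the first trial, and ended on the last. The frame IDs, stimulus names, and baseDir are invented for illustration. startSessionRecording and endSessionRecording appear in this frame's property list, but it is worth checking against your frameplayer version whether they belong on the trial frames themselves or on separate frames.

```javascript
// Hypothetical stimuli and frame IDs, for illustration only.
const stimuli = ["trial_a.png", "trial_b.png", "trial_c.png"];

const frames = {};
stimuli.forEach((src, i) => {
  frames[`rt-trial-${i + 1}`] = {
    kind: "exp-lookit-images-audio",
    images: [{ id: "stim", src: src, position: "fill" }],
    baseDir: "https://example.com/mystudy/", // placeholder URL
    autoProceed: true,
    durationSeconds: 2,
    doRecording: false, // no separate clip per trial
    startSessionRecording: i === 0, // first trial only
    endSessionRecording: i === stimuli.length - 1 // last trial only
  };
});

// Paste the printed object into the "frames" section of a study.
console.log(JSON.stringify(frames, null, 2));
```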

This frame is displayed fullscreen, but is not paused or otherwise disabled if the user leaves fullscreen. A button appears prompting the user to return to fullscreen mode.

Any number of images may be placed on the screen, and their position specified. (Aspect ratio will be the same as the original image.)

The examples below show a variety of usages, corresponding to those shown in the video.

image-1: Single image displayed full-screen, maximizing area on monitor, for 8 seconds.

image-2: Single image displayed at specified position, with 'next' button to move on

image-3: Image plus audio, auto-proceeding after audio completes and 4 seconds go by

image-4: Image plus audio, with 'next' button to move on

image-5: Two images plus audio question asking child to point to one of the images, demonstrating different timing of image display & highlighting of images during audio

image-6: Three images with audio prompt, family has to click one of two to continue

image-7: Three images with audio prompt, family has to click correct one to continue - audio feedback on incorrect answer

image-8: Three images with audio prompt, family has to click correct one to continue - text feedback on incorrect answer

    "frames": {
      "image-1": {
        "kind": "exp-lookit-images-audio",
        "images": [
          {
            "id": "cats",
            "src": "two_cats.png",
            "position": "fill"
          }
        ],
        "baseDir": "https://www.mit.edu/~kimscott/placeholderstimuli/",
        "autoProceed": true,
        "doRecording": true,
        "durationSeconds": 8,
        "maximizeDisplay": true
      },
      "image-2": {
        "kind": "exp-lookit-images-audio",
        "images": [
          {
            "id": "cats",
            "src": "three_cats.JPG",
            "top": 10,
            "left": 30,
            "width": 40
          }
        ],
        "baseDir": "https://www.mit.edu/~kimscott/placeholderstimuli/",
        "autoProceed": false,
        "doRecording": true,
        "parentTextBlock": {
          "text": "Some explanatory text for parents",
          "title": "For parents"
        }
      },
      "image-3": {
        "kind": "exp-lookit-images-audio",
        "audio": "wheresremy",
        "images": [
          {
            "id": "remy",
            "src": "wheres_remy.jpg",
            "position": "fill"
          }
        ],
        "baseDir": "https://www.mit.edu/~kimscott/placeholderstimuli/",
        "audioTypes": ["mp3", "ogg"],
        "autoProceed": true,
        "doRecording": false,
        "durationSeconds": 4,
        "parentTextBlock": {
          "text": "Some explanatory text for parents",
          "title": "For parents"
        },
        "showProgressBar": true
      },
      "image-4": {
        "kind": "exp-lookit-images-audio",
        "audio": "peekaboo",
        "images": [
          {
            "id": "remy",
            "src": "peekaboo_remy.jpg",
            "position": "fill"
          }
        ],
        "baseDir": "https://www.mit.edu/~kimscott/placeholderstimuli/",
        "audioTypes": ["mp3", "ogg"],
        "autoProceed": false,
        "doRecording": false,
        "parentTextBlock": {
          "text": "Some explanatory text for parents",
          "title": "For parents"
        }
      },
      "image-5": {
        "kind": "exp-lookit-images-audio",
        "audio": "remyzennaintro",
        "images": [
          {
            "id": "remy",
            "src": "scared_remy.jpg",
            "position": "left"
          },
          {
            "id": "zenna",
            "src": "love_zenna.jpg",
            "position": "right",
            "displayDelayMs": 1500
          }
        ],
        "baseDir": "https://www.mit.edu/~kimscott/placeholderstimuli/",
        "highlights": [
          {
            "range": [0, 1.5],
            "imageId": "remy"
          },
          {
            "range": [1.5, 3],
            "imageId": "zenna"
          }
        ],
        "autoProceed": false,
        "doRecording": true,
        "parentTextBlock": {
          "text": "Some explanatory text for parents",
          "title": "For parents"
        }
      },
      "image-6": {
        "kind": "exp-lookit-images-audio",
        "audio": "matchremy",
        "images": [
          {
            "id": "cue",
            "src": "happy_remy.jpg",
            "position": "center",
            "nonChoiceOption": true
          },
          {
            "id": "option1",
            "src": "happy_zenna.jpg",
            "position": "left",
            "displayDelayMs": 2000
          },
          {
            "id": "option2",
            "src": "annoyed_zenna.jpg",
            "position": "right",
            "displayDelayMs": 2000
          }
        ],
        "baseDir": "https://www.mit.edu/~kimscott/placeholderstimuli/",
        "autoProceed": false,
        "doRecording": true,
        "choiceRequired": true,
        "parentTextBlock": {
          "text": "Some explanatory text for parents",
          "title": "For parents"
        },
        "canMakeChoiceBeforeAudioFinished": true
      },
      "image-7": {
        "kind": "exp-lookit-images-audio",
        "audio": "matchzenna",
        "images": [
          {
            "id": "cue",
            "src": "sad_zenna.jpg",
            "position": "center",
            "nonChoiceOption": true
          },
          {
            "id": "option1",
            "src": "surprised_remy.jpg",
            "position": "left",
            "feedbackAudio": "negativefeedback",
            "displayDelayMs": 3500
          },
          {
            "id": "option2",
            "src": "sad_remy.jpg",
            "correct": true,
            "position": "right",
            "displayDelayMs": 3500
          }
        ],
        "baseDir": "https://www.mit.edu/~kimscott/placeholderstimuli/",
        "autoProceed": false,
        "doRecording": true,
        "choiceRequired": true,
        "parentTextBlock": {
          "text": "Some explanatory text for parents",
          "title": "For parents"
        },
        "correctChoiceRequired": true,
        "canMakeChoiceBeforeAudioFinished": false
      },
      "image-8": {
        "kind": "exp-lookit-images-audio",
        "audio": "matchzenna",
        "images": [
          {
            "id": "cue",
            "src": "sad_zenna.jpg",
            "position": "center",
            "nonChoiceOption": true
          },
          {
            "id": "option1",
            "src": "surprised_remy.jpg",
            "position": "left",
            "feedbackText": "Try again! Remy looks surprised in that picture. Can you find the picture where he looks sad, like Zenna?",
            "displayDelayMs": 3500
          },
          {
            "id": "option2",
            "src": "sad_remy.jpg",
            "correct": true,
            "position": "right",
            "feedbackText": "Great job! Remy is sad in that picture, just like Zenna is sad.",
            "displayDelayMs": 3500
          }
        ],
        "baseDir": "https://www.mit.edu/~kimscott/placeholderstimuli/",
        "autoProceed": false,
        "doRecording": true,
        "choiceRequired": true,
        "parentTextBlock": {
          "text": "Some explanatory text for parents",
          "title": "For parents"
        },
        "correctChoiceRequired": true,
        "canMakeChoiceBeforeAudioFinished": false
      }
    }

Item Index

Methods

- destroyRecorder

- destroySessionRecorder

- exitFullscreen

- hideRecorder

- makeTimeEvent

- onRecordingStarted

- onSessionRecordingStarted

- serializeContent

- setupRecorder

- showFullscreen

- showRecorder

- startRecorder

- startSessionRecorder

- stopRecorder

- stopSessionRecorder

- whenPossibleToRecordObserver

- whenPossibleToRecordSessionObserver

Properties

- assetsToExpand

- audio

- audioOnly

- audioTypes

- autoProceed

- autosave

- backgroundColor

- baseDir

- canMakeChoiceBeforeAudioFinished

- choiceRequired

- correctChoiceRequired

- displayFullscreen

- displayFullscreenOverride

- doRecording

- doUseCamera

- durationSeconds

- endSessionRecording

- fsButtonID

- fullScreenElementId

- generateProperties

- highlights

- images

- maximizeDisplay

- maxRecordingLength

- maxUploadSeconds

- pageColor

- parameters

- parentTextBlock

- recorder

- recorderElement

- recorderReady

- selectNextFrame

- sessionAudioOnly

- sessionMaxUploadSeconds

- showPreviousButton

- showProgressBar

- showReplayButton

- showWaitForRecordingMessage

- showWaitForUploadMessage

- startRecordingAutomatically

- startSessionRecording

- stoppedRecording

- videoId

- videoList

- videoTypes

- waitForRecordingMessage

- waitForRecordingMessageColor

- waitForUploadMessage

- waitForUploadMessageColor

- waitForWebcamImage

- waitForWebcamVideo

Data collected

Events

- clickImage

- dismissFeedback

- displayAllImages

- displayImage

- endTimer

- enteredFullscreen

- failedToStartAudio

- failedToStartImageAudio

- finishAudio

- hasCamAccess

- leftFullscreen

- nextFrame

- pauseVideo

- previousFrame

- recorderReady

- replayAudio

- sessionRecorderReady

- startAudio

- startImageAudio

- startSessionRecording

- startTimer

- stoppingCapture

- stopSessionRecording

- trialComplete

- unpauseVideo

- videoStreamConnection

Methods

destroyRecorder()

destroySessionRecorder()

exitFullscreen()

hideRecorder()

makeTimeEvent(eventName, [extra])

Create the time event payload for a particular frame / event. This can be overridden to add fields to every event sent by a particular frame.

Parameters:

- eventName
- [extra]

Returns:

Event type, time, and any additional metadata provided

onRecordingStarted()

onSessionRecordingStarted()

serializeContent(eventTimings)

Each frame that extends ExpFrameBase will send at least an array eventTimings, a frame type, and any generateProperties back to the server upon completion. Individual frames may define additional properties that are sent.

Parameters:

- eventTimings (Array)

Returns:

setupRecorder(element)

Parameters:

- element (Node): A DOM node representing where to mount the recorder

Returns:

showFullscreen()

showRecorder()

startRecorder()

Returns:

startSessionRecorder()

Returns:

stopRecorder()

Returns:

stopSessionRecorder()

Returns:

whenPossibleToRecordObserver()

whenPossibleToRecordSessionObserver()

Properties

audio

Object[]

Audio file to play at the start of this frame. This can either be an array of {src: 'url', type: 'MIMEtype'} objects, e.g. listing equivalent .mp3 and .ogg files, or can be a single string filename which will be expanded based on baseDir and audioTypes values (see audioTypes).

Default: []

audioOnly

Number

Default: 0

audioTypes

String[]

If audioTypes is ['typeA', 'typeB'] and an audio source is given as intro, the audio source will be expanded out to

    [
      {
        src: 'baseDir' + 'typeA/intro.typeA',
        type: 'audio/typeA'
      },
      {
        src: 'baseDir' + 'typeB/intro.typeB',
        type: 'audio/typeB'
      }
    ]

Default: ['mp3', 'ogg']
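The expansion described above can be sketched as a small function, which is handy for checking where your audio files need to live. This is an illustration of the documented pattern, not the frameplayer's own code; the function name and example URL are made up.

```javascript
// Sketch of the documented expansion; not the frameplayer's implementation.
function expandAudio(audio, baseDir, audioTypes) {
  // Arrays of {src, type} objects are used as given.
  if (Array.isArray(audio)) {
    return audio;
  }
  // baseDir should end in a slash; add one if it is missing.
  const base = baseDir === "" || baseDir.endsWith("/") ? baseDir : baseDir + "/";
  // One source per audio type: baseDir/<type>/<stub>.<type>
  return audioTypes.map((type) => ({
    src: `${base}${type}/${audio}.${type}`,
    type: `audio/${type}`
  }));
}

console.log(expandAudio("intro", "https://example.com/mystudy", ["mp3", "ogg"]));
// [
//   { src: 'https://example.com/mystudy/mp3/intro.mp3', type: 'audio/mp3' },
//   { src: 'https://example.com/mystudy/ogg/intro.ogg', type: 'audio/ogg' }
// ]
```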

autoProceed

Boolean

Whether to proceed automatically when all conditions are met, vs. enabling next button at that point.

If true: the next, previous, and replay buttons are hidden, and the frame auto-advances after ALL of the following happen:

- (a) the audio segment (if any) completes
- (b) the durationSeconds (if any) is achieved
- (c) a choice is made (if required)
- (d) that choice is correct (if required)
- (e) the choice audio (if any) completes
- (f) the choice text (if any) is dismissed

If false: the next, previous, and replay buttons (as applicable) are displayed. It becomes possible to press 'next' only once the conditions above are met.

Default: false
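The conditions listed for autoProceed combine as a single "all of these" check. The sketch below expresses that logic; the `state` field names are hypothetical and chosen only for this example, and the frame's internal bookkeeping is not documented here.

```javascript
// Sketch of conditions (a)-(f). `config` uses this frame's property names;
// the `state` fields are hypothetical names invented for this example.
function canProceed(config, state) {
  const hasAudio = config.audio && config.audio.length > 0;
  const audioDone = !hasAudio || state.audioFinished;               // (a)
  const durationDone = !config.durationSeconds ||
    state.elapsedSeconds >= config.durationSeconds;                 // (b)
  const choiceMade = !config.choiceRequired || state.choiceMade;    // (c)
  const choiceCorrect = !config.choiceRequired ||
    !config.correctChoiceRequired || state.choiceCorrect;           // (d)
  const feedbackAudioDone = !state.feedbackAudioPlaying;            // (e)
  const feedbackTextDismissed = !state.feedbackTextShowing;         // (f)
  return Boolean(audioDone && durationDone && choiceMade &&
    choiceCorrect && feedbackAudioDone && feedbackTextDismissed);
}

const config = { audio: "intro", durationSeconds: 4, choiceRequired: false };
console.log(canProceed(config, { audioFinished: true, elapsedSeconds: 3 })); // false
console.log(canProceed(config, { audioFinished: true, elapsedSeconds: 4 })); // true
```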

autosave

Number

private

Default: 1

backgroundColor

String

Color of background. See https://developer.mozilla.org/en-US/docs/Web/CSS/color_value for acceptable syntax: can use color names ('blue', 'red', 'green', etc.), or rgb hex values (e.g. '#800080' - include the '#')

Default: 'black'

baseDir

String

Base directory for where to find stimuli. Any image src values that are not full URLs will be expanded by prefixing with baseDir + img/. Any audio/video src values provided as strings rather than objects with src and type will be expanded out to baseDir/avtype/[stub].avtype, where the potential avtypes are given by audioTypes and videoTypes.

baseDir should include a trailing slash (e.g., http://stimuli.org/myexperiment/); if a value is provided that does not end in a slash, one will be added.

Default: ''

canMakeChoiceBeforeAudioFinished

Boolean

Whether the participant can make a choice before audio finishes. (Only relevant if choiceRequired is true.)

Default: false

choiceRequired

Boolean

Whether this is a frame where the user needs to click to select one of the images before proceeding.

Default: false

correctChoiceRequired

Boolean

[Only used if choiceRequired is true] Whether the participant has to select one of the correct images before proceeding.

Default: false

displayFullscreenOverride

Boolean

Set to true to display this frame in fullscreen mode, even if the frame type is not always displayed fullscreen. (For instance, you might use this to keep a survey between test trials in fullscreen mode.)

Default: false

doRecording

Boolean

Whether to do webcam recording (will wait for webcam connection before starting audio or showing images if so)

doUseCamera

Boolean

Default: true

durationSeconds

Number

Minimum duration of frame in seconds. If set, then it will only be possible to proceed to the next frame after both the audio completes AND this duration is achieved.

Default: 0

endSessionRecording

Boolean

Default: false

fullScreenElementId

String

private

generateProperties

String

Function to generate additional properties for this frame (like {"kind": "exp-lookit-text"}) at the time the frame is initialized. Allows behavior of study to depend on what has happened so far (e.g., answers on a form or to previous test trials). Must be a valid Javascript function, returning an object, provided as a string.

Arguments that will be provided are: expData, sequence, child, pastSessions, conditions.

expData, sequence, and conditions are the same data as would be found in the session data shown on the Lookit experimenter interface under 'Individual Responses', except that they will only contain information up to this point in the study.

expData is an object consisting of frameId: frameData pairs; the data associated with a particular frame depends on the frame kind.

sequence is an ordered list of frameIds, corresponding to the keys in expData.

conditions is an object representing the data stored by any randomizer frames; keys are frameIds for randomizer frames and data stored depends on the randomizer used.

child is an object that has the following properties - use child.get(propertyName) to access:

- additionalInformation: String; additional information field from child form
- ageAtBirth: String; child's gestational age at birth in weeks. Possible values are "24" through "39", "na" (not sure or prefer not to answer), "<24" (under 24 weeks), and "40>" (40 or more weeks).
- birthday: Date object
- gender: "f" (female), "m" (male), "o" (other), or "na" (prefer not to answer)
- givenName: String, child's given name/nickname
- id: String, child UUID
- languageList: String, space-separated list of languages child is exposed to (2-letter codes)
- conditionList: String, space-separated list of conditions/characteristics of child from registration form, as used in criteria expression, e.g. "autism_spectrum_disorder deaf multiple_birth"

pastSessions is a list of previous response objects for this child and this study, ordered starting from most recent (at index 0 is this session!). Each has properties (access as pastSessions[i].get(propertyName)):

- completed: Boolean, whether they submitted an exit survey
- completedConsentFrame: Boolean, whether they got through at least a consent frame
- conditions: Object representing any conditions assigned by randomizer frames
- createdOn: Date object
- expData: Object consisting of frameId: frameData pairs
- globalEventTimings: list of any events stored outside of individual frames - currently just used for attempts to leave the study early
- sequence: ordered list of frameIds, corresponding to keys in expData
- isPreview: Boolean, whether this is from a preview session (possible in the event this is an experimenter's account)

Example:

    function(expData, sequence, child, pastSessions, conditions) {
      return {
        'blocks': [
          {
            'text': 'Name: ' + child.get('givenName')
          },
          {
            'text': 'Frame number: ' + sequence.length
          },
          {
            'text': 'N past sessions: ' + pastSessions.length
          }
        ]
      };
    }

(This example is split across lines for readability; when added to JSON it would need to be on one line.)

Default: null
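Since the function has to be supplied as a one-line string inside JSON, one way to avoid hand-editing is to write it normally and collapse it programmatically. The sketch below does that for a made-up rule (a longer trial on a child's first session); the rule and the frame contents are illustrative assumptions, not part of this frame.

```javascript
// Write the function normally. Avoid // comments INSIDE it: once the
// source is collapsed to one line, a // comment would swallow the rest.
const generateProperties = function (expData, sequence, child, pastSessions, conditions) {
  return {
    durationSeconds: pastSessions.length <= 1 ? 8 : 4
  };
};

// Collapse all runs of whitespace (including newlines) to single spaces.
// Caveat: this also collapses whitespace inside string literals.
const asOneLine = generateProperties.toString().replace(/\s+/g, " ");

const frame = {
  kind: "exp-lookit-images-audio",
  images: [{ id: "cats", src: "two_cats.png", position: "fill" }],
  autoProceed: true,
  generateProperties: asOneLine
};

// JSON.stringify takes care of escaping any quotes in the function string.
console.log(JSON.stringify(frame, null, 2));
```

The rule relies on the note above that index 0 of pastSessions is the current session, so a length of 1 means there are no earlier sessions.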

highlights

Object[]

Array representing times when particular images should be highlighted. Each element of the array should be of the form {'range': [3.64, 7.83], 'imageId': 'myImageId'}. The two range values are the start and end times of the highlight in seconds, relative to the audio played. The imageId corresponds to the id of an element of images.

Highlights can overlap in time. Any that go longer than the audio will just be ignored/cut off.

One strategy for generating a bunch of highlights for a longer story is to annotate using Audacity and export the labels to get the range values.

Sub-properties:
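For the Audacity strategy mentioned above, exported label tracks are plain text with one label per line: start time, end time, and label text, separated by tabs. A minimal converter might look like the following, assuming each label's text was typed as the id of the image to highlight (that convention is an assumption of this sketch).

```javascript
// Converts Audacity's exported label text (tab-separated start, end, label
// on each line) into a highlights array. Assumes each label's text is the
// id of the image to highlight.
function labelsToHighlights(labelText) {
  return labelText
    .split(/\r?\n/)
    .map((line) => line.split("\t"))
    // Skip blank lines and the extra "\" lines written for spectral selections.
    .filter((fields) => fields.length >= 3 && fields[0].trim() !== "\\")
    .map(([start, end, label]) => ({
      range: [parseFloat(start), parseFloat(end)],
      imageId: label.trim()
    }));
}

const exported = "0.000000\t1.500000\tremy\n1.500000\t3.000000\tzenna\n";
console.log(JSON.stringify(labelsToHighlights(exported)));
// [{"range":[0,1.5],"imageId":"remy"},{"range":[1.5,3],"imageId":"zenna"}]
```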

images

Object[]

Array of images to display and information about their placement. For each image, you need to specify src (image name/URL) and placement (either by providing left/width/top values, or by using a position preset).

Everything else is optional! This is where you would say that an image should be shown at a delay.

Sub-properties:

- id (String): unique ID for this image
- src (String): URL of image source. This can be a full URL, or relative to baseDir (see baseDir).
- alt (String): alt-text for image in case it doesn't load and for screen readers
- left (Number): left margin, as percentage of story area width. If not provided, the image is centered horizontally.
- width (Number): image width, as percentage of story area width. Note: in general only provide one of width and height; the other will be adjusted to preserve the image aspect ratio.
- top (Number): top margin, as percentage of story area height. If not provided, the image is centered vertically.
- height (Number): image height, as percentage of story area height. Note: in general only provide one of width and height; the other will be adjusted to preserve the image aspect ratio.
- position (String): one of 'left', 'center', 'right', 'fill' to use presets that place the image in approximately the left, center, or right third of the screen or to fill the screen as much as possible. This overrides left/width/top values if given.
- nonChoiceOption (Boolean): [Only used if choiceRequired is true] whether this should be treated as a non-clickable option (e.g., this is a picture of a girl, and the child needs to choose whether the girl has a DOG or a CAT)
- displayDelayMs (Number): Delay at which to show the image after trial start (timing will be relative to any audio or to start of trial if no audio). Optional; default is to show images immediately.
- feedbackAudio (Object[]): [Only used if choiceRequired is true] Audio to play upon clicking this image. This can either be an array of {src: 'url', type: 'MIMEtype'} objects, e.g. listing equivalent .mp3 and .ogg files, or can be a single string filename which will be expanded based on baseDir and audioTypes values (see audioTypes).
- feedbackText (String): [Only used if choiceRequired is true] Text to display in a dialogue window upon clicking the image.
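Before uploading a study, it can help to sanity-check an images array against the requirements above (a src plus some placement for each image). The checker below is a hypothetical helper written for this purpose, not something the frameplayer provides.

```javascript
const POSITION_PRESETS = ["left", "center", "right", "fill"];

// Returns a list of problems found in an images array (empty if none).
function checkImages(images) {
  const problems = [];
  const seenIds = new Set();
  images.forEach((image, i) => {
    const label = image.id || `image at index ${i}`;
    if (!image.src) {
      problems.push(`${label}: missing src`);
    }
    if (image.id) {
      if (seenIds.has(image.id)) {
        problems.push(`${label}: duplicate id`);
      }
      seenIds.add(image.id);
    }
    const hasPreset = POSITION_PRESETS.includes(image.position);
    const hasManual = ["left", "width", "top", "height"].some(
      (key) => typeof image[key] === "number"
    );
    if (image.position !== undefined && !hasPreset) {
      problems.push(`${label}: unknown position preset "${image.position}"`);
    }
    if (!hasPreset && !hasManual) {
      problems.push(`${label}: no placement given`);
    }
  });
  return problems;
}

console.log(checkImages([
  { id: "cats", src: "three_cats.JPG", top: 10, left: 30, width: 40 }
])); // []
console.log(checkImages([{ id: "a", position: "middle" }]));
```

The second call reports three problems: the missing src, the unrecognized preset, and the resulting lack of any placement.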

maximizeDisplay

Boolean

Whether to have the image display area take up the whole screen if possible. This will only apply if (a) there is no parent text and (b) there are no control buttons (next, previous, replay) because the frame auto-proceeds.

Default: false

maxRecordingLength

Number

Default: 7200

maxUploadSeconds

Number

Default: 5

pageColor

String

Color of area where images are shown, if different from overall background. Defaults to backgroundColor if one is provided. See https://developer.mozilla.org/en-US/docs/Web/CSS/color_value for acceptable syntax: can use color names ('blue', 'red', 'green', etc.), or rgb hex values (e.g. '#800080' - include the '#')

Default: 'white'

parameters

Object[]

An object containing values for any parameters (variables) to use in this frame. Any property VALUES in this frame that match any of the property NAMES in parameters will be replaced by the corresponding parameter value. For example, suppose your frame is:

    {
      'kind': 'FRAME_KIND',
      'parameters': {
        'FRAME_KIND': 'exp-lookit-text'
      }
    }

Then the frame kind will be exp-lookit-text. This may be useful if you need to repeat values for different frame properties, especially if your frame is actually a randomizer or group. You may use parameters nested within objects (at any depth) or within lists.

You can also use selectors to randomly sample from or permute a list defined in parameters. Suppose STIMLIST is defined in parameters, e.g. a list of potential stimuli. Rather than just using STIMLIST as a value in your frames, you can also:

- Select the Nth element (0-indexed) of the value of STIMLIST (will cause an error if N >= STIMLIST.length):

      'parameterName': 'STIMLIST#N'

- Select (uniformly) a random element of the value of STIMLIST:

      'parameterName': 'STIMLIST#RAND'

- Set parameterName to a random permutation of the value of STIMLIST:

      'parameterName': 'STIMLIST#PERM'

- Select the next element in a random permutation of the value of STIMLIST, which is used across all substitutions in this randomizer. This allows you, for instance, to provide a list of possible images in your parameterSet, and use a different one each frame with the subset/order randomized per participant. If more STIMLIST#UNIQ parameters than elements of STIMLIST are used, we loop back around to the start of the permutation generated for this randomizer.

      'parameterName': 'STIMLIST#UNIQ'

Default: {}
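To make the substitution rules concrete, here is a rough re-implementation of the behavior described for parameters and the #N, #RAND, #PERM, and #UNIQ selectors. It is a sketch for reasoning about configs offline, written from the description above; the frameplayer's actual code may handle edge cases differently, and the function names are invented.

```javascript
// Fisher-Yates shuffle on a copy, using the supplied random source.
function shuffled(list, random) {
  const copy = list.slice();
  for (let i = copy.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    [copy[i], copy[j]] = [copy[j], copy[i]];
  }
  return copy;
}

// Recursively replaces values that name a parameter (optionally with a
// selector). `uniq` holds the shared permutation state for #UNIQ.
function substitute(value, parameters, random = Math.random, uniq = {}) {
  if (Array.isArray(value)) {
    return value.map((v) => substitute(v, parameters, random, uniq));
  }
  if (value !== null && typeof value === "object") {
    const out = {};
    for (const [key, v] of Object.entries(value)) {
      out[key] = substitute(v, parameters, random, uniq);
    }
    return out;
  }
  if (typeof value !== "string") return value;

  const [name, selector] = value.split("#");
  if (!Object.prototype.hasOwnProperty.call(parameters, name)) return value;
  const param = parameters[name];
  if (selector === undefined) return param;
  if (selector === "RAND") return param[Math.floor(random() * param.length)];
  if (selector === "PERM") return shuffled(param, random);
  if (selector === "UNIQ") {
    // One permutation per list, shared across uses; loop around at the end.
    if (!uniq[name]) uniq[name] = { order: shuffled(param, random), next: 0 };
    const state = uniq[name];
    const item = state.order[state.next % state.order.length];
    state.next += 1;
    return item;
  }
  if (!/^\d+$/.test(selector) || Number(selector) >= param.length) {
    throw new RangeError(`Bad selector "${value}"`);
  }
  return param[Number(selector)];
}

const parameters = {
  FRAME_KIND: "exp-lookit-text",
  STIMLIST: ["a.png", "b.png", "c.png"]
};
const frame = {
  kind: "FRAME_KIND",
  first: "STIMLIST#0",
  pair: ["STIMLIST#UNIQ", "STIMLIST#UNIQ"]
};
const resolved = substitute(frame, parameters);
console.log(resolved.kind);  // exp-lookit-text
console.log(resolved.first); // a.png
console.log(resolved.pair);  // two different items from STIMLIST
```

Passing a fixed `random` function (for example `() => 0`) makes the output reproducible, which is convenient when checking a config by hand.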

recorder

VideoRecorder

private

recorderReady

Boolean

private

selectNextFrame

String

Function to select which frame index to go to when using the 'next' action on this frame. Allows flexible looping / short-circuiting based on what has happened so far in the study (e.g., once the child answers N questions correctly, move on to next segment). Must be a valid Javascript function, returning a number from 0 through frames.length - 1, provided as a string.

Arguments that will be provided are: frames, frameIndex, expData, sequence, child, pastSessions

frames is an ordered list of frame configurations for this study; each element is an object corresponding directly to a frame you defined in the JSON document for this study (but with any randomizer frames resolved into the particular frames that will be used this time).

frameIndex is the index in frames of the current frame

expData is an object consisting of frameId: frameData pairs; the data associated with a particular frame depends on the frame kind.

sequence is an ordered list of frameIds, corresponding to the keys in expData.

child is an object that has the following properties - use child.get(propertyName) to access:

- additionalInformation: String; additional information field from child form
- ageAtBirth: String; child's gestational age at birth in weeks. Possible values are "24" through "39", "na" (not sure or prefer not to answer), "<24" (under 24 weeks), and "40>" (40 or more weeks).
- birthday: timestamp in format "Mon Apr 10 2017 20:00:00 GMT-0400 (Eastern Daylight Time)"
- gender: "f" (female), "m" (male), "o" (other), or "na" (prefer not to answer)
- givenName: String, child's given name/nickname
- id: String, child UUID

pastSessions is a list of previous response objects for this child and this study, ordered starting from most recent (at index 0 is this session!). Each has properties (access as pastSessions[i].get(propertyName)):

- completed: Boolean, whether they submitted an exit survey
- completedConsentFrame: Boolean, whether they got through at least a consent frame
- conditions: Object representing any conditions assigned by randomizer frames
- createdOn: timestamp in format "Thu Apr 18 2019 12:33:26 GMT-0400 (Eastern Daylight Time)"
- expData: Object consisting of frameId: frameData pairs
- globalEventTimings: list of any events stored outside of individual frames - currently just used for attempts to leave the study early
- sequence: ordered list of frameIds, corresponding to keys in expData

Example that just sends us to the last frame of the study no matter what:

`"function(frames, frameIndex, frameData, expData, sequence, child, pastSessions) {return frames.length - 1;}"`

Default: null

sessionAudioOnly

Number

Default: 0

sessionMaxUploadSeconds

Number

Default: 10

showPreviousButton

Boolean

[Only used if not autoProceed] Whether to show a previous button to allow the participant to go to the previous frame

Default: true

showProgressBar

Boolean

[Only used if durationSeconds set] Whether to show a progress bar based on durationSeconds in the parent text area.

Default: false

showReplayButton

Boolean

[Only used if not autoProceed AND if there is audio] Whether to show a replay button to allow the participant to replay the audio

Default: false

showWaitForRecordingMessage

Boolean

Default: true

showWaitForUploadMessage

Boolean

Default: true

startRecordingAutomatically

Boolean

Default: false

startSessionRecording

Boolean

Default: false

stoppedRecording

Boolean

private

videoId

String

private

Format: videoStream_<experimentId>_<frameId>_<sessionId>_timestampMS_RRR, where RRR are random numeric digits.
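When matching downloaded recordings back to frames, an ID in this format can be split into its parts. The sketch below assumes that the experiment and session IDs contain no underscores (frame IDs may), which the format itself does not guarantee; the function name and sample ID are invented.

```javascript
// Sketch: split a video ID of the documented form into its parts.
// Assumes experimentId and sessionId contain no underscores, so any
// extra underscores are attributed to the frameId.
function parseVideoId(videoId) {
  const parts = videoId.split("_");
  if (parts.length < 6 || parts[0] !== "videoStream") {
    return null;
  }
  const n = parts.length;
  return {
    experimentId: parts[1],
    frameId: parts.slice(2, n - 3).join("_"),
    sessionId: parts[n - 3],
    timestampMs: Number(parts[n - 2]),
    random: parts[n - 1]
  };
}

console.log(parseVideoId("videoStream_exp1_3-image-1_sess9_1555605206000_123"));
// { experimentId: 'exp1', frameId: '3-image-1', sessionId: 'sess9',
//   timestampMs: 1555605206000, random: '123' }
```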

videoList

List

private

videoTypes

String[]

If videoTypes is ['typeA', 'typeB'] and a video source is given as intro, the video source will be expanded out to

    [
      {
        src: 'baseDir' + 'typeA/intro.typeA',
        type: 'video/typeA'
      },
      {
        src: 'baseDir' + 'typeB/intro.typeB',
        type: 'video/typeB'
      }
    ]

Default: ['mp4', 'webm']

waitForRecordingMessage

String

Default: 'Please wait... <br><br> starting webcam recording'

waitForRecordingMessageColor

String

Default: 'white'

waitForUploadMessage

String

Default: 'Please wait... <br><br> uploading video'

waitForUploadMessageColor

String

Default: 'white'

waitForWebcamImage

String

`baseDir/img/` if this frame otherwise supports use of `baseDir`.

Default: ''

waitForWebcamVideo

String

`{'src': 'https://...', 'type': '...'}` objects (e.g. providing both webm and mp4 versions at specified URLs) or a single string relative to `baseDir/<EXT>/` if this frame otherwise supports use of `baseDir`.

Default: ''

Data keys collected

These are the fields that will be captured by this frame and sent back to the Lookit server. Each of these fields will correspond to one row of the CSV frame data for a given response - the row will have key set to the data key name, and value set to the value for this response.

Equivalently, this data will be available in the exp_data field of the response JSON data.

audioPlayed

String

Source URL of audio played, if any. If multiple sources provided (e.g. mp3 and ogg versions) just the first is stored.

eventTimings

Ordered list of events captured during this frame (oldest to newest). Each event is represented as an object with at least the properties {'eventType': EVENTNAME, 'timestamp': TIMESTAMP}. See Events tab for details of events that might be captured.
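For reaction time trials, the interval between two events in eventTimings is usually what you want. The helper below is a sketch for use in your own analysis, under two assumptions not stated here: that event names may carry a frame-kind prefix such as "exp-lookit-images-audio:", and that timestamps are strings the JavaScript Date constructor can parse.

```javascript
// Milliseconds from the first `fromEvent` to the first later `toEvent`,
// or null if either is missing. Matches bare or "prefix:name" event types.
function latencyMs(eventTimings, fromEvent, toEvent) {
  const matches = (event, name) =>
    event.eventType === name || event.eventType.endsWith(":" + name);
  const start = eventTimings.find((e) => matches(e, fromEvent));
  if (!start) return null;
  const startMs = new Date(start.timestamp).getTime();
  const end = eventTimings.find(
    (e) => matches(e, toEvent) && new Date(e.timestamp).getTime() >= startMs
  );
  return end ? new Date(end.timestamp).getTime() - startMs : null;
}

// Made-up event data for illustration.
const eventTimings = [
  { eventType: "exp-lookit-images-audio:startAudio", timestamp: "2019-04-18T16:33:26.000Z" },
  { eventType: "exp-lookit-images-audio:displayAllImages", timestamp: "2019-04-18T16:33:26.050Z" },
  { eventType: "exp-lookit-images-audio:clickImage", timestamp: "2019-04-18T16:33:27.300Z" }
];

console.log(latencyMs(eventTimings, "displayAllImages", "clickImage")); // 1250
```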

frameType

Type of frame: EXIT (exit survey), CONSENT (consent or assent frame), or DEFAULT (anything else)

generatedProperties

Any properties generated via a custom generateProperties function provided to this frame (e.g., a score you computed to decide on feedback). In general will be null.

images

Array

Array of images used in this frame [same as passed to this frame, but may reflect random assignment for this particular participant]

Events

clickImage

When one of the image options is clicked during a choice frame

displayAllImages

When images are displayed to participant (for images without any delay added)

endTimer

Timer for set-duration trial ends

enteredFullscreen

failedToStartAudio

When main audio cannot be started. In this case we treat it as if the audio was completed (for purposes of allowing participant to proceed)

failedToStartImageAudio

When image/feedback audio cannot be started. In this case we treat it as if the audio was completed (for purposes of allowing participant to proceed)

Event Payload:

- imageId (String)

finishAudio

When main audio segment finishes playing

leftFullscreen

nextFrame

Move to next frame

pauseVideo

previousFrame

Move to previous frame

recorderReady

replayAudio

When main audio segment is replayed

sessionRecorderReady

startAudio

When main audio segment starts playing

startSessionRecording

startTimer

Timer for set-duration trial begins

stoppingCapture

stopSessionRecording

trialComplete

Trial is complete and attempting to move to next frame; may wait for recording to catch up before proceeding.

unpauseVideo

videoStreamConnection

Event Payload:

- status (String): status of video stream connection, e.g. 'NetConnection.Connect.Success' if successful